I had an idea for an application that would use some native code but also needed to be customizable by changing JavaScript. V8 was my first choice for a JavaScript engine to integrate. It is the most popular JavaScript engine. I have modified Chromium before (V8 is part of the Chromium source code), so I thought this would be more of the same procedure that I had followed then. That’s not the case. The last time I worked with this code, it was with Microsoft Visual C++. But back in September 2024 the V8 group followed Chromium’s lead and removed support for MSVC. The change makes sense: they wanted to reduce the compiler nuances and hacks that they had to account for when updating the source code. The old procedure I used was not going to work, so I had to figure out how to build V8 again.

Appreciation for the V8 Team

I want to take a moment to thank the V8 team for their effort. I’ve not interacted with them directly myself. But from reading in the Google Group for V8, I’ve seen that they’ve been responsive to questions that others have asked, and I’ve found their responses helpful. If/when I do interact with them directly I want to remember to express my appreciation. If you interact with them, I encourage doing the same.

Why doesn’t Google Just Distribute a Precompiled Version?

The first time I used V8, I questioned why Google didn’t just make a precompiled version available. After working with it myself, I can better appreciate why one might not want to do that. There are a lot of variations in build options, and shipping a binary for every combination simply isn’t practical.

The Build Script

Because the build procedure is expected to change over time, I’ve made the rare decision to call out the V8 version that I’m working with in the title of this post. This procedure might not work with earlier or later versions of V8. Consider which version of V8 you wish to build. The greater the difference between that version number and the one I’ve used here (13.7.9), the higher the chance that this document is less applicable.

As I did with the AWS C++ SDK and the Clang compiler, I wanted to script the compilation process and add the script to my developer setup scripts. The script is in a batch file. While I would have preferred to use PowerShell, the build process from Google uses batch files. Yes, you can call a batch file from PowerShell. But there are differences in how batch files execute from PowerShell vs the command prompt.

Installing the Required Visual Studio Components

If you are building the V8 source code, you probably already have Visual Studio 2022 (version 17) installed with C++ support. You’ll want to add support for the Clang compiler and additional tools. While you could start the Visual Studio installer and select the required components, in my script I’ve included a command to invoke the installer with those components selected. You’ll have to give it permission to run. If you want to invoke this command yourself to put the components in place, here it is.

pushd "C:\Program Files (x86)\Microsoft Visual Studio\Installer\"

vs_installer.exe install --productid Microsoft.VisualStudio.Product.Community --ChannelId VisualStudio.17.Release --add Microsoft.VisualStudio.Workload.NativeDesktop --add Microsoft.VisualStudio.Component.VC.ATLMFC --add Microsoft.VisualStudio.Component.VC.Tools.ARM64 --add Microsoft.VisualStudio.Component.VC.MFC.ARM64 --add Microsoft.VisualStudio.Component.Windows10SDK.20348 --add Microsoft.VisualStudio.Component.VC.Llvm.Clang --add Microsoft.VisualStudio.Component.VC.Llvm.ClangToolset --add Microsoft.VisualStudio.ComponentGroup.NativeDesktop.Llvm.Clang --includeRecommended

popd

Depot Tools

In addition to the source code, Google makes available a collection of tools and utilities that are used in building V8 and Chromium, known as “Depot Tools.” These tools contain a collection of executables, shell scripts, and batch files that help abstract away the differences between operating systems, so that the build rules and procedures are nearly the same on each of them.

Customizing my Script

For the script that I’ve provided, there are a few variables that you probably want to modify. The drive on which the code will be downloaded, the folders into which the code and Depot Tools will be placed, and the path to a temp folder are all specified in the batch file. I’ve selected paths that result in c:\shares\projects\google being the parent folder of all of these, with the V8 source code being placed in c:\shares\projects\google\v8. If you don’t like these paths, update the values that are assigned to drive, ProjectRoot, TempFolder, and DepotFolder.

Running the Script

The Happy Path

If all goes well, a developer opens their Visual Studio Developer Command Prompt, invokes the script, and is presented with the Visual Studio Installer UI a few moments later. The user would OK/Next through the installer. After that, the Windows SDK installer should appear and the user does the same thing. The user could then walk away, and when they come back, they should have compiled V8 libraries for debug and release modes for x64 and ARM64.

A walkthrough of what happens

The script I provided must be run from a Visual Studio Developer Command Prompt. Administrative-level privileges are not needed for the script itself, but they will be requested when the Visual Studio changes are applied. Because elevated processes don’t run as a child process of the build script, the script has no way of knowing when the installation completes. It will pause when the Visual Studio Installer is invoked and won’t continue until the user presses a key in the command window. Once the script continues, it will download the Windows SDK and invoke its installer. Next, it clones the Depot Tools folder from Google. After cloning Depot Tools, the application gclient needs to be invoked at least once. This script will invoke it.

With gclient initialized, it is now invoked to download the V8 source code and check out a specific version. Then the builds get kicked off. The arguments for the builds could be passed as command line arguments, or they could be placed in a file named args.gn. I’ve placed configuration files for the 4 build variations with this build script.

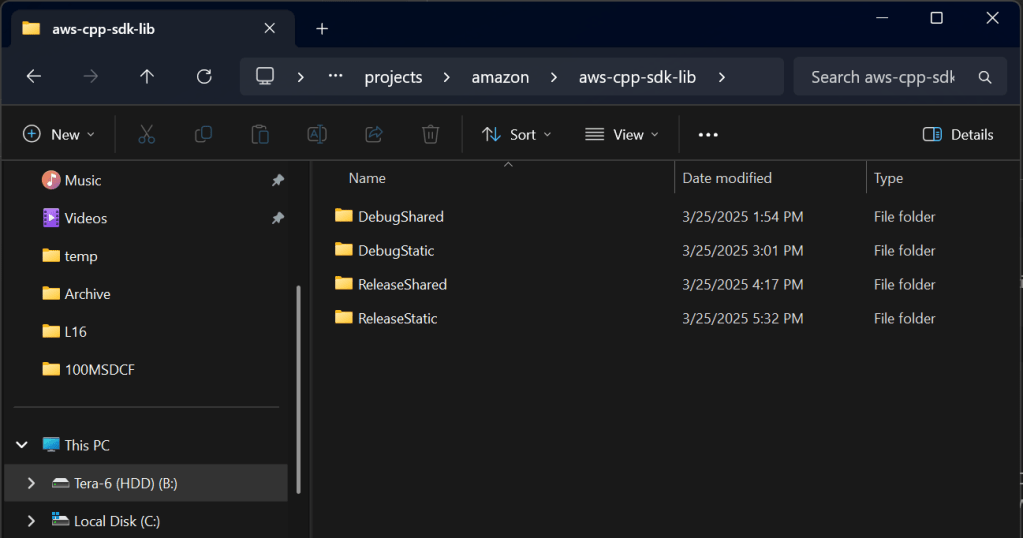

V8 Hello World

Just as I did with the AWS C++ SDK script, I’ve got a “Hello World” program that doesn’t do anything significant. Its purpose is to stand as a target for validating that the SDK successfully compiled and that we can link to it. The Hello World source is from one of the programs that Google provides. I’ve placed it in a Visual Studio project. If you are using the same settings that I used in my build script, you will be able to compile this program without making any modifications. Nevertheless, I’ll explain what I had to do.

    // v8monolithlinktest.cpp : This file contains the 'main' function. Program execution begins and ends there.
    //
    #include <memory>  // std::unique_ptr

    #include <libplatform/libplatform.h>
    #include <v8-context.h>
    #include <v8-initialization.h>
    #include <v8-isolate.h>
    #include <v8-local-handle.h>
    #include <v8-primitive.h>
    #include <v8-script.h>

    int main(int argc, char** argv)
    {
        // Initialize V8 and give it a platform to run on.
        v8::V8::InitializeExternalStartupData(argv[0]);
        std::unique_ptr<v8::Platform> platform = v8::platform::NewSingleThreadedDefaultPlatform();
        v8::V8::InitializePlatform(platform.get());
        v8::V8::Initialize();

        // Constructing CreateParams is enough to force a link against the V8 lib.
        // No isolate is created here, so array_buffer_allocator is never assigned.
        v8::Isolate::CreateParams create_params;

        // Tear V8 down again.
        v8::V8::Dispose();
        v8::V8::DisposePlatform();
        delete create_params.array_buffer_allocator;
        return 0;
    }

I made a new C++ Console program in Visual Studio. The program needs to know the folders that contain the LIB file and the header files. The settings for binding to the C/C++ runtime must also be consistent between the LIB and our program. I will only cover configuring the program for debug mode. Configuring for release will involve different values for a few of the settings.

Right-click on the project and select “Properties.” Navigate to the options C++ -> Command Line on the left. In the text box on the right labeled Additional Options, enter the argument /Zc:__cplusplus (that argument contains 2 underscores). This is necessary because, for compatibility reasons, the Visual C++ compiler reports an older version of C++ through the __cplusplus macro unless this switch is set. The V8 source code has macros within it that will intentionally cause the compilation to fail if the compiler doesn’t report C++ 20 or newer. Now, go to the setting C++ -> Language -> C++ Language Standard. Change it to C++ 20. Go to C++ -> General -> Additional Include Directories. In the drop-down on the right side, select “Edit.” Add a new path. If you’ve used the default settings, the new path will be c:\shares\projects\google\v8\include. Finally, go to Linker -> General. For “Additional Library Directories,” select the drop-down and click the “Edit” option. Enter the path c:\shares\projects\google\v8\out\x64.debug.
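To see what the /Zc:__cplusplus switch changes, a small check like the following can help. It is only a sketch, and the function names are hypothetical helpers that are not part of V8 or Visual Studio. Without the switch, the Visual C++ compiler reports __cplusplus as 199711L regardless of the selected language standard, while its own _MSVC_LANG macro carries the level that was actually selected.

```cpp
// Sketch: report which C++ level this translation unit advertises.
// The function names are hypothetical helpers, not part of V8.

// Value of the standard __cplusplus macro as the compiler reports it.
long reported_cplusplus() {
    return __cplusplus;
}

// On the Visual C++ compiler, _MSVC_LANG holds the language level that was
// actually selected, even when __cplusplus still reports 199711L.
// Other compilers do not define it, so fall back to __cplusplus there.
long selected_language_level() {
#if defined(_MSVC_LANG)
    return _MSVC_LANG;
#else
    return __cplusplus;
#endif
}

// True when headers that test __cplusplus would see C++20 or newer.
bool reports_cpp20_or_newer() {
    return reported_cplusplus() >= 202002L;
}
```

Printing both values from a test program before and after adding the switch should show the first value change while the second stays the same.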

With those settings applied, if you compile now the compilation will fail. Let’s examine the errors that come back and why.

Unresolved External Symbols

You might get Unresolved External symbol errors for all of the V8 related functions. Here is some of the error output.

v8monolithlinktest.obj : error LNK2019: unresolved external symbol "class std::unique_ptr<class v8::Platform,struct std::default_delete<class v8::Platform> > __cdecl v8::platform::NewSingleThreadedDefaultPlatform(enum v8::platform::IdleTaskSupport,enum v8::platform::InProcessStackDumping,class std::unique_ptr<class v8::TracingController,struct std::default_delete<class v8::TracingController> >)" (?NewSingleThreadedDefaultPlatform@platform@v8@@YA?AV?$unique_ptr@VPlatform@v8@@U?$default_delete@VPlatform@v8@@@std@@@std@@W4IdleTaskSupport@12@W4InProcessStackDumping@12@V?$unique_ptr@VTracingController@v8@@U?$default_delete@VTracingController@v8@@@std@@@4@@Z) referenced in function main

1>v8monolithlinktest.obj : error LNK2019: unresolved external symbol "public: __cdecl v8::Isolate::CreateParams::CreateParams(void)" (??0CreateParams@Isolate@v8@@QEAA@XZ) referenced in function main

These occur because you’ve not linked to the necessary V8 library. This can be resolved through the project settings or through the source code. I’m going to resolve it through the source code. The #pragma comment() directive can be used to link to LIB files. Let’s link to v8_monolith.lib by placing this somewhere in one of the cpp files.

#pragma comment(lib, "v8_monolith.lib")

If you compile again, you’ll still get an unresolved externals error. This one isn’t about a V8 function, though.

1>v8_monolith.lib(time.obj) : error LNK2019: unresolved external symbol __imp_timeGetTime referenced in function "class base::A0xE7D68EDC::TimeTicks __cdecl v8::base::`anonymous namespace'::RolloverProtectedNow(void)" (?RolloverProtectedNow@?A0xE7D68EDC@base@v8@@YA?AVTimeTicks@12@XZ)

1>v8_monolith.lib(platform-win32.obj) : error LNK2001: unresolved external symbol __imp_timeGetTime

1>C:\Users\Joel\source\repos\v8monolithlinktest\x64\Debug\v8monolithlinktest.exe : fatal error LNK1120: 1 unresolved externals

The linker can’t find the library that contains the function used to get the time. Linking to WinMM will take care of that. We add another #pragma comment() directive.

#pragma comment(lib, "WinMM.lib")
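For context, here is a minimal sketch of the same fix in isolation, outside of V8. It calls timeGetTime directly and names the library in the source file. The helper name millisecond_ticks is hypothetical, and the non-Windows branch exists only so that the snippet also compiles with other toolchains.

```cpp
#include <chrono>
#include <cstdint>

#if defined(_MSC_VER)
#include <windows.h>
#include <mmsystem.h>  // declares timeGetTime
// Ask the linker to pull in WinMM.lib, which provides timeGetTime.
// Without this line (or the equivalent project setting) the call below
// produces an unresolved external symbol error at link time.
#pragma comment(lib, "WinMM.lib")
#endif

// Hypothetical helper: returns a millisecond tick count.
std::uint64_t millisecond_ticks() {
#if defined(_MSC_VER)
    return static_cast<std::uint64_t>(timeGetTime());
#else
    // Fallback for other toolchains, so the sketch stays portable.
    using namespace std::chrono;
    return static_cast<std::uint64_t>(
        duration_cast<milliseconds>(steady_clock::now().time_since_epoch()).count());
#endif
}
```

The project-settings route mentioned earlier would list the same library under the linker's additional dependencies instead of naming it in the source.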

Here’s another linker error that will be repeated several hundred times.

1>libcpmtd0.lib(xstol.obj) : error LNK2038: mismatch detected for '_ITERATOR_DEBUG_LEVEL': value '0' doesn't match value '2' in v8monolithlinktest.obj

The possible range for _ITERATOR_DEBUG_LEVEL is from 0 to 2 (inclusive). This error is stating that the V8 LIB has this constant defined to 0 while in our code, it is defaulting to 2. We need to #define it in our code before any of the standard libraries are included. It is easiest to do this at the top of the code. I make the following the first line in my source code.

#define _ITERATOR_DEBUG_LEVEL 0
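The position of that define matters, because it has to be seen before the first standard library header. The following sketch shows the ordering in a small, self-contained file. The helpers iterator_debug_level and sum are hypothetical and exist only to show that the value is visible and that the containers behave as usual.

```cpp
// Must come before any standard library header, otherwise the library's
// own default has already been chosen for this translation unit.
#define _ITERATOR_DEBUG_LEVEL 0

#include <vector>

// Hypothetical helper: report the level this file was compiled with.
int iterator_debug_level() {
    return _ITERATOR_DEBUG_LEVEL;
}

// Standard containers still work as usual; only the extra iterator
// checking of the debug runtime is switched off.
int sum(const std::vector<int>& values) {
    int total = 0;
    for (int v : values) {
        total += v;
    }
    return total;
}
```

Setting the value in the project's preprocessor definitions instead would apply it to every source file, which avoids depending on include order.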

The code will now compile. But when you run it, there are a few failures that you will encounter. I’ll just list the errors here. The code terminates when it encounters one of these errors, so you will only be able to observe one per run. The next error is encountered after you’ve addressed the previous one. These failures come from the code checking that your runtime settings are compatible with the compile-time settings. Some settings can only be set at compile time. If the V8 code and your code have different expectations, there’s no way to resolve the conflict at run time. Thus the code fails deliberately to force the developer to resolve the issue.

Embedder-vs-V8 build configuration mismatch. On embedder side pointer compression is DISABLED while on V8 side it's ENABLED.

Embedder-vs-V8 build configuration mismatch. On embedder side V8_ENABLE_CHECKS is DISABLED while on V8 side it's ENABLED.

These are also resolved by #define directives before the relevant includes. These values must also be consistent with the values that were used when compiling the V8 library. The lines that resolve these errors follow.

#define V8_COMPRESS_POINTERS

#define V8_ENABLE_CHECKS true
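The idea behind these run-time checks can be pictured with a small model. This is a simplified illustration and not V8’s actual code: the enum, the function names, and the message wording are all hypothetical. The embedder’s flags are derived from its own #define directives, and the library compares them with the flags it was built with.

```cpp
#include <string>

// The embedder-side defines from the article.
#define V8_COMPRESS_POINTERS
#define V8_ENABLE_CHECKS true

// Hypothetical flag bits for this model.
enum BuildFlag : int {
    kPointerCompression = 1 << 0,
    kEnableChecks = 1 << 1,
};

// Flags as the embedder's translation unit sees them. Only the presence
// of each macro is tested here, not its value.
inline int embedder_flags() {
    int flags = 0;
#ifdef V8_COMPRESS_POINTERS
    flags |= kPointerCompression;
#endif
#ifdef V8_ENABLE_CHECKS
    flags |= kEnableChecks;
#endif
    return flags;
}

inline const char* state(bool on) {
    return on ? "ENABLED" : "DISABLED";
}

// Compare the embedder's flags with the ones baked into the library.
// Returns an empty string on a match, otherwise a description of the
// first mismatch, which mirrors why only one error shows up per run.
std::string first_mismatch(int embedder, int library) {
    struct Named { int flag; const char* name; };
    const Named all[] = {
        {kPointerCompression, "pointer compression"},
        {kEnableChecks, "V8_ENABLE_CHECKS"},
    };
    for (const Named& n : all) {
        const bool e = (embedder & n.flag) != 0;
        const bool l = (library & n.flag) != 0;
        if (e != l) {
            return std::string(n.name) + " is " + state(e) +
                   " on the embedder side and " + state(l) +
                   " on the library side";
        }
    }
    return {};
}
```

In this model only the presence of a macro matters, so the value written after V8_ENABLE_CHECKS has no effect on the outcome.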

I’ve mentioned the values of options within the V8 library a few times. Those values come from the arguments that were passed when V8 was built. Let’s take a look at one of the args.gn files that contains these arguments.

    dcheck_always_on = false
    is_clang = true
    is_component_build = false
    is_debug = true
    symbol_level=2
    target_cpu = "x64"
    treat_warnings_as_errors = false
    use_custom_libcxx = false
    # use_glib = true
    # v8_enable_gdbjit = false
    v8_enable_i18n_support = true
    v8_enable_pointer_compression = true
    v8_enable_sandbox = false
    v8_enable_test_features = false
    v8_monolithic = true
    v8_static_library = true
    v8_target_cpu="x64"
    v8_use_external_startup_data = false
    # cc_wrapper="sccache"

I won’t explain everything within these settings, but there are a few items to call out.

- v8_monolithic – this option causes all of the functionality to be compiled into a single lib.
- use_custom_libcxx – when true, the code will use a custom C++ library from Google. When false, the code will use a standard library. Always set this to false.
- is_debug – set to true for debug builds, and false for release builds.
- v8_static_library – when true, the output contains libs to be statically linked to a program. When false, DLLs are produced that must be distributed with the program.

Many of these settings have significant or interesting impacts. The details of what each one does aren’t discussed here. I’m assuming that most people reading this are just getting started with V8, and the details of each build option might not be at the top of your list at that stage. For some of these settings, Google has full-page documents on what the settings do. The two most important settings are v8_monolithic and is_debug. v8_monolithic will package all of the functionality for V8 in a single large lib. The one I just compiled is about 2 gigabytes. If this option isn’t used, then the developer must make sure that all of the necessary DLLs for the program are collected and deployed with their program.

Enabling is_debug (especially with a symbol level of 2) lets you step into the V8 code. Even if you trust that the V8 code works fine, it is convenient to be able to step into it.

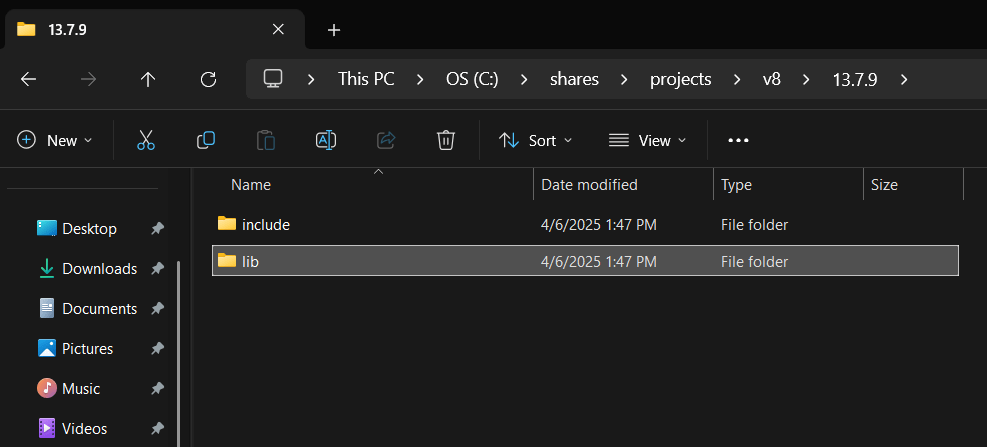

Distributing the Outputs

After you’ve made a build and are happy with it, you will want to either distribute it to other developers or archive it for yourself. Since this example makes the monolithic build, the only files that are needed are a single lib file (though a very large one) and the header files. You can find the V8 libs in v8\out\x64.release\v8_monolith.lib and v8\out\x64.debug\v8_monolith.lib. Note that these files have the same name and are only distinguished by their folder. When you archive the lib, you may want to archive the args.gn file that was used to make it. It can serve as documentation for a developer using the lib. You also need the include folder, v8\include. That’s all that you need. Because I might want to have more than one version of the V8 binaries on my computer, I’ve also ensured that the version number is part of the file path.
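Since an archived args.gn documents how a lib was built, a small helper could read it and tell a developer which settings were switched on. The sketch below is an illustration under stated assumptions: it only understands simple key = value lines with # comments, not the full GN syntax, and the function names are hypothetical.

```cpp
#include <map>
#include <sstream>
#include <string>

// Remove leading and trailing whitespace.
static std::string trim(const std::string& s) {
    const char* ws = " \t\r\n";
    const auto begin = s.find_first_not_of(ws);
    if (begin == std::string::npos) return "";
    const auto end = s.find_last_not_of(ws);
    return s.substr(begin, end - begin + 1);
}

// Parse simple "key = value" lines. Everything after '#' is treated as a
// comment, which is a simplification (a '#' inside quotes is not handled).
std::map<std::string, std::string> parse_gn_args(const std::string& text) {
    std::map<std::string, std::string> args;
    std::istringstream in(text);
    std::string line;
    while (std::getline(in, line)) {
        const auto hash = line.find('#');
        if (hash != std::string::npos) line.erase(hash);
        const auto eq = line.find('=');
        if (eq == std::string::npos) continue;
        const std::string key = trim(line.substr(0, eq));
        std::string value = trim(line.substr(eq + 1));
        // Strip surrounding double quotes from string values.
        if (value.size() >= 2 && value.front() == '"' && value.back() == '"') {
            value = value.substr(1, value.size() - 2);
        }
        if (!key.empty()) args[key] = value;
    }
    return args;
}

// True only when the file sets the key to true explicitly. A missing key
// means the build used its default, which this sketch does not know.
bool explicitly_true(const std::map<std::string, std::string>& args,
                     const std::string& key) {
    const auto it = args.find(key);
    return it != args.end() && it->second == "true";
}
```

For the args.gn shown earlier, explicitly_true(args, "v8_enable_pointer_compression") would return true, which lines up with the V8_COMPRESS_POINTERS define used in the test program.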

Finding Resources

I looked around for a good book on the V8 system and couldn’t find any. It makes sense that there are no such books: it is a rapidly evolving system. The best place I think you will find for support is the V8 Google Group. Don’t just go there when you need help; it may be good to browse it occasionally to pick up information you might not have otherwise. There is also v8.dev for a great surface-level explanation of the system. Note that some of the code in the examples on that site is a bit out of date. I tried a few and found that minor adjustments are needed for some code examples to work.

